Crisis Scenario: When AI-Driven Hiring Assessments Go Wrong



Mobley v. Workday, a potential U.S. federal class action lawsuit, brings into focus the risks and rewards inherent in AI-driven talent acquisition. To consider these challenges, let’s examine a crisis scenario that draws together real-world concerns about AI-driven hiring assessment.

Imagine: Large Tech Corporation Faces $50 Million Class Action over Hiring Discrimination

Sarah Chen, CHRO of TechCorp, received the call that every HR executive dreads. Their AI-powered hiring platform had just been hit with a federal class action lawsuit alleging systematic discrimination against candidates over 40, minorities, and individuals with disabilities.


The plaintiff, Marcus Rodriguez, a 45-year-old software engineer with a mild hearing impairment, claimed he was automatically rejected from over 80 positions within TechCorp’s system over two years—often within minutes of applying. His legal team had data showing a pattern: zero candidates over 40 with disclosed disabilities had been advanced past the initial AI screening in the past 18 months.

TechCorp had implemented “HireFast AI,” a cutting-edge algorithmic screening tool that promised to:

- Reduce time-to-hire by 70%

- Process 10,000+ applications daily

- “Eliminate human bias” through machine learning

The system analyzed resumes, social media profiles, and video interview responses using natural language processing and facial recognition technology. It seemed like the future of hiring—until it became a legal nightmare.

What can be achieved with an evidence-based assessment? Take a complimentary development profile to find out.

How the AI Hiring Assessments Failed

A deeper investigation revealed several critical flaws:

1. Biased Training Data

- The AI was trained on historical hiring data from TechCorp’s existing (predominantly young, male, non-disabled) workforce.

- Because it learned from this narrow data set, it replicated past discrimination patterns instead of eliminating them.

2. Problematic Assessment of Potential

Because the process let the AI make decisions without human review, candidates' potential was broadly misjudged. This narrowed the applicant pool without HR, recruiters, or hiring managers being aware of it.

- Employment gaps (often due to caregiving responsibilities or disability-related issues) triggered automatic rejections.

- The system penalized candidates who disclosed accommodation needs.

- Graduation dates became age proxies, systematically filtering out older candidates.
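The graduation-date problem can be checked with very little code. Here is a minimal Python sketch, assuming hypothetical applicant records with `grad_year` and `age` fields (the data, field names, and cutoff year are invented for illustration and are not taken from any real system). It compares the share of applicants aged 40 or over in the whole pool with their share among those a graduation-year filter would screen out.

```python
# Hypothetical applicant records; all values are illustrative only.
APPLICANTS = [
    {"grad_year": 1998, "age": 49},
    {"grad_year": 2001, "age": 46},
    {"grad_year": 2004, "age": 43},
    {"grad_year": 2015, "age": 32},
    {"grad_year": 2019, "age": 28},
]


def share_over_40(records):
    """Fraction of records where the applicant is 40 or older."""
    if not records:
        return 0.0
    return sum(1 for r in records if r["age"] >= 40) / len(records)


def proxy_check(records, cutoff_year):
    """Compare the 40+ share overall with the 40+ share among applicants
    who would be screened out by a 'graduated before cutoff_year' rule."""
    screened_out = [r for r in records if r["grad_year"] < cutoff_year]
    return {
        "overall": share_over_40(records),
        "screened_out": share_over_40(screened_out),
    }


result = proxy_check(APPLICANTS, cutoff_year=2005)
print(result)
```

If the screened-out group skews much older than the pool as a whole, the field is behaving like an age proxy, even though age itself never appears in the rule.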

3. Hiring Decisions Unrelated to the Job

The goal of assessment in hiring is to make decisions driven by job-related criteria. The unchecked artificial intelligence in this case led to several significant consequences for the business.

- No human reviewed why the AI tool rejected or advanced candidates.

- The organization was put in the difficult position of not knowing why candidates were rejected.

- With no audit trail, fairness and legal defensibility were called into question.
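To make the missing audit trail concrete, here is a hedged Python sketch of what a reviewable decision record might look like. The `ScreeningDecision` class, its fields, and the sample data are all hypothetical, not a description of any vendor's product. The idea is simply that every decision carries job-related reasons and a named human reviewer, and anything lacking either gets flagged.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ScreeningDecision:
    """Hypothetical audit record for one automated screening decision."""
    candidate_id: str
    outcome: str                                       # "advance" or "reject"
    reasons: List[str] = field(default_factory=list)   # job-related criteria cited
    reviewed_by: Optional[str] = None                  # human reviewer, if any


def needs_review(decisions):
    """Return IDs of decisions that have no stated reason or no human reviewer."""
    return [
        d.candidate_id
        for d in decisions
        if not d.reasons or d.reviewed_by is None
    ]


# Illustrative data only.
DECISIONS = [
    ScreeningDecision("c-001", "reject", ["missing required certification"], "recruiter_a"),
    ScreeningDecision("c-002", "reject"),
    ScreeningDecision("c-003", "reject", ["employment gap"]),
    ScreeningDecision("c-004", "advance", ["meets required skills"], "recruiter_b"),
]

flagged = needs_review(DECISIONS)
print(flagged)
```

A record like `c-003` is worth a second look for another reason: it has a stated reason, but an employment gap is not obviously a job-related criterion, which is exactly what a human reviewer is there to catch.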

The Legal Avalanche of Unvalidated and Unchecked AI Assessments in Hiring

The lawsuit alleged racial and age discrimination as well as discriminatory decision-making against people with disabilities. Potential damages reached into the tens of millions, but the reputational damage was immediate and immeasurable. Within 48 hours:

- Stock price dropped 12%

- Three major clients paused partnerships

- #TechCorpDiscrimination trended on social media

- The EEOC announced a formal investigation

Why the Empirical Approach to Hiring Assessments by Corvirtus Has Never Faced Such Challenges

With that realistic but hypothetical case in mind, let's look at how we've helped our clients avoid legal scrutiny throughout our forty-plus-year history.

The Fundamental Difference: Evidence-Based vs. Algorithm-Based

While AI hiring tools like those in the Mobley v. Workday case face increasing legal challenges, experienced assessment providers like Corvirtus have maintained clean legal records through fundamentally different approaches.

We begin by:

- Gathering subject matter expertise through interviewing incumbents and supervisors to create competency models and frameworks that clearly define the job, culture, and what success looks like.

- “Testing the tests” through ongoing, organization-specific validation studies showing that scores relate to measurable performance outcomes. We use incumbent assessment and performance data to set the job-related benchmarks that determine how the assessments influence applicant decision-making.

- Looking at the entire hiring process and how the assessments can best support decisions at each step of the process, including how they can support the development and success of new hires.
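At its simplest, "testing the tests" means checking whether assessment scores track later job performance. The Python sketch below is an illustration only, assuming made-up incumbent scores and supervisor ratings. It computes a plain Pearson correlation and is not a description of the actual Corvirtus validation method. A real validation study would also account for sample size, statistical significance, and adverse impact.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sample has no variance")
    return cov / sqrt(var_x * var_y)


# Hypothetical incumbent data: assessment scores and supervisor ratings (1-5).
assessment_scores = [52, 61, 70, 74, 83, 90]
performance_ratings = [2.5, 3.0, 3.0, 3.5, 4.0, 4.5]

validity = pearson_r(assessment_scores, performance_ratings)
print(f"criterion validity estimate: {validity:.2f}")
```

A value near zero would suggest the assessment says little about performance in this role. A clearly positive value, replicated on adequate samples, is the kind of evidence that supports job-relatedness.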

If we compare this to AI systems that operate independently, they:

- Are often trained on historical data from other organizations, without a job analysis or validation.

- May collect little or no actual job performance data specific to your organization, candidates, and new hires.

- Are at risk of perpetuating existing biases because they rely on training data without careful oversight.

Preventing Bias in Hiring Assessments

Corvirtus Safeguards for Hiring

- As we shared earlier, all of our assessments are validated before they are used in hiring decisions. Part of the validation process includes determining that the assessments do not adversely impact any protected class. On top of this, we proactively monitor assessments for each organization and position.

- Further, by building assessments that measure core competencies, we reduce the likelihood that protected characteristics, like age, gender, or disability, are used in decision-making. Assessments equip decision-makers with job-pertinent information that makes our biases (even "I've had trouble working with people who worked at his prior employer") less compelling.

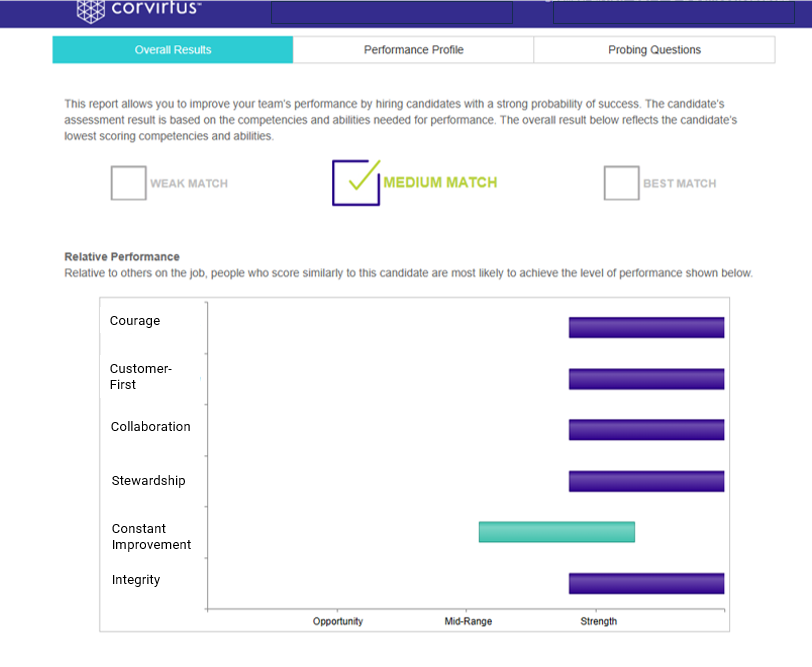

- Further, our assessments deliver information on the same core competencies, abilities, and job-related traits on every candidate. Decision-makers receive information on the same set of competencies and abilities with clarity on what's driving the overall assessment result.

Legal Defensibility by Design: Why Does This Matter?

- Our evidence-based approach to assessment makes a clear job-related case to refute any claim of discrimination.

- Hiring assessments driven by independent artificial intelligence may lack validation, may vary in how candidates are evaluated, and may vary in the clarity and consistency of the information shared with hiring managers.

- Corvirtus assessments have data and documentation (e.g., validation reports, distributions of scores, EEO information) ready to evaluate on an ongoing basis and in the event of any question of discrimination.

Transparency and Human Oversight in Hiring Assessment

Our approach to psychometric assessment in hiring simply makes things easier, in part because of greater transparency. This means:

- Clear, interpretable results that hiring managers can understand.

- Assessment results designed with hiring managers in mind. While we value (and highly recommend!) training for anyone using the reports, they are easily understood without much background. Training usually focuses on maximizing information for interviewing, making hiring decisions, and supporting new hire success.

- As you can see in the assessment result above, information is consistently tied to your core competencies and the traits needed for the role. The behavioral interview questions that are populated depend on the candidate's relative strengths and vulnerabilities. Narrative information describes how the candidate will likely contribute to the organization and how to maximize their performance.

Let's compare this to common pitfalls in an AI-driven hiring process.

- Decisions are based on algorithms that are consulted after the fact and may be difficult (or impossible) to explain.

- Human oversight of decisions is difficult and/or inconsistent.

- It is difficult to prove the job-relatedness and consistency of the criteria set by the AI.

What's Validation? Should it be Industry or Organization Specific?

Corvirtus assessments are validated within industries and by organization to ensure accurate prediction of performance. This replaces generic algorithms that may not account for role-specific requirements. This means that every assessment is tailored to the unique competencies and cultural requirements of the organization.

Practical Applications + Lessons for Hiring Assessment Tools

If you're using AI-driven hiring tools:

- Audit your AI systems now. Is there adverse impact? Are decisions made on job-relevant criteria? Don’t wait for a complaint or lawsuit.

- Implement human oversight. Ensure algorithmic decisions can be (and are) reviewed and explained regularly.

- Monitor for adverse impact. Regularly analyze outcomes by protected class.

- Document job-relatedness. Ensure all selection criteria tie directly to job requirements. Evaluate how assessment tool performance and job performance are related.
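For the adverse impact monitoring step, a common starting point is the "four-fifths" rule of thumb from the EEOC's Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is below 80% of the highest group's rate is generally treated as showing evidence of adverse impact. The Python sketch below uses invented group labels and counts. It is a screening check rather than a legal determination, and small samples call for proper statistical testing.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (advanced, applied); returns group -> selection rate."""
    return {
        group: advanced / applied
        for group, (advanced, applied) in outcomes.items()
        if applied > 0
    }


def impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no groups with applicants")
    top = max(rates.values())
    if top == 0:
        # Nobody advanced in any group, so there is no rate to compare against.
        return {group: 0.0 for group in rates}
    return {group: rate / top for group, rate in rates.items()}


def flag_adverse_impact(outcomes, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(
        group for group, ratio in impact_ratios(outcomes).items()
        if ratio < threshold
    )


# Hypothetical screening outcomes: (advanced past screening, total applicants).
SCREENING = {
    "under_40": (120, 400),
    "40_and_over": (30, 200),
}

ratios = impact_ratios(SCREENING)
flagged_groups = flag_adverse_impact(SCREENING)
print(ratios, flagged_groups)
```

Running a check like this on a regular schedule, for each position and each stage of the hiring process, turns "monitor for adverse impact" from an intention into a routine.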

For Those Considering Hiring Technology:

Consider these questions for due diligence:

- Has this tool been validated for our specific industry and roles? How?

- Can the vendor provide evidence of job-relatedness?

- What adverse impact monitoring is included?

- How transparent are the algorithmic decisions?

- What legal protections does the vendor provide?

- What is the track record for successfully building the key results you're seeking (e.g., performance, retention, culture)?

To guide your assessment search, check out our Excel-based tracking sheet of questions to ask and areas to consider when selecting any hiring assessment.

Maximizing Hiring Assessment Tools for the Bottom Line and a Hiring Process with Stronger Legal Defensibility

The difference between TechCorp’s crisis and our approach and record isn’t about technology versus tradition—it’s about methodology, validation, and accountability.

Evidence-based hiring assessments provide detailed information on job-related traits for hiring managers, while black-box AI systems often can’t explain their decisions.

When organizations build hiring processes around established competency models that clearly define what success looks like, they create both better outcomes and stronger legal defensibility.

2025 is expected to be the year the floodgates open with a swell of AI-related legal complaints. The traditional approach of validation-first, evidence-based assessments offers not just legal protection, but better hiring outcomes.

The question isn’t whether your hiring technology is sophisticated—it’s whether it’s consistent, job-related, and legally defensible.